If a skeptic advancing an argument cannot cite exceptions to his own case, tends to turn ugly when anyone voices dissent, shows no awareness of his own weaknesses, and habitually cannot hold a state of epoché and declare “I don’t know,” then be very wary. That person may be trying to sell you something or promote an agenda, or may indeed be lying.

There are four indicators I look for in a researcher who is objective and sufficiently transparent to be trusted in the handling of the Knowledge Development Process (Skepticism and Science). These are warning flags I have employed in my labs and businesses for decades. They were hard-earned lessons about the nature of people, but they have proven very helpful over the years.

1. The demonstrated ability to neutrally say “I do not have an answer for that” or “I do not know”

One of the ways to spot a fake skeptic, or at a professional level, those who pretend to perform and understand science, is that the pretender tends to have a convenient answer for just about everything. Watch the Tweets of the top 200 narcissistic Social Skeptics and you will observe (aside from their all tweeting the exact same claims within five minutes of one another) that there exists not one pluralistic topic on which they are not certain of the answer. Everything has a retort, a one-liner, or a club recitation. They treat your dissent on the issue as a personal attack, and their first response to your objection is to get angry and attack you personally. Too many people fall for this ruse. In this dance of irrationality passed off as science, personal psychological motivations come into play and countermand objective thinking. This can be exposed through a single warning flag indicating that the person you are speaking with might not be trustworthy. An acquaintance of mine, who is a skeptic, was recently faced with a very challenging, paradigm-shaking observation set. I will not go into what it was, as siding with issues of plurality is not the purpose of this blog. The bottom line was that when I told him, “Ken, this is an excellent opportunity to make observations around this phenomenon to the yea or nay, damn you are lucky,” he retorted, “No, I really would rather not. I mean not only is it a waste of time, but imagine me telling the people at work ‘Oh, I just went to ___________ last night and took a look at what was happening.’ That would not go over well, and besides it really is most likely [pareidolia] and will be a distraction.” In this short conversation, Ken revealed an abject distaste for the condition of saying ‘I do not know.’ His claim to absolute knowledge on this topic over the years was revealed to be simply a defense mechanism motivated by fear. Fear of the phenomenon itself. Fear of his associates. Fear of the state of unknowing.

It struck me then that Ken was not a skeptic. It was more comfortable for him to conform with pat answers and to pretend to know. He employed SSkepticism as a shield of denial, in order to protect his emotions and vulnerabilities. The Ten Pillars hidden underneath the inability to say “I do not know” can serve as the underpinning of fatal flaws in judgement. Flaws in judgement which might surface on the battlefield of science at a most unexpected time. Flaws in judgement which, were you not the wiser, might lead you to fall prey to a verisimilitude of fact and to follow a SSkeptic with ulterior motives or influences.

The Ten Pillars which hinder a person’s ability to say “I don’t know” are:

I. Social Category and Non-Club Hatred

II. Narcissism and Personal Power

III. Promotion of Personal Religious Agenda

IV. Emotional Psychological Damage/Anger

V. Overcompensation for a Secret Doubt

VI. Fear of the Unknown

VII. Effortless Argument Addiction

VIII. Magician’s Deception Rush

IX. Need to Belittle Others

X. Need to Belong/Fear of Club Perception

These are the things to watch out for when considering the advice of someone who promotes themselves as a skeptic, or even as a science professional. One key hint is whether the individual can dispassionately declare “I don’t know” or “I do not have an answer for that” on a challenging or emotional subject.

2. An innate eschewing of hate and lack of a quick temper

One of the key warning flags of a pretense, especially with regard to the ability to handle a pluralistic topic, is the way in which a person handles diversity, conflict or disagreement. Just as a small trickle of lava from a volcano indicates a large movement of magma below, so the telltale clues of hatred and disdain are the key warning flags of a person’s inability to handle a topic with objectivity. An angry person, or a person filled with hate, will always struggle with objectivity. Some hate consists of repressed, unpopular prejudice long and rightly eschewed by society, while other hatreds are simply current popular themes, toward which society smiles and looks the other way. A 1999 study published by Bruce Hunsberger and colleagues revealed partial correlations indicating that authoritarianism was a reliable predictor of sexism/gender hatred, and was related further still to prejudice and religious irrationality.¹ When someone forces their religion on others, right-wing or not, and cites this ontology as amplified or justified by the victim’s gender or ethnicity, be very cautious. Either way, hate is hate, and it is indicative of a fallow heart which will trade justice for hubris, and truth for even the warm satisfaction of an error.

I do not trust the cosmology of racists or of those who conceal a latent misandry or misogyny, nor the eschatology of those who yearn for the destruction of the Earth or of certain races. I have spent years of my life in disadvantaged regions of Africa and the Middle East, working for the peoples of these regions on clean water, medical and food initiatives. Hate breeds disdain and greed, which breed corruption by process, which breeds control, which breeds suffering. All of this stems from hate: gender, tribal, racial, religious, political and social. It is all hate, and it accomplishes the exact same results, every time.

Watch for a person’s ability to understand this key tenet of human existence and to curate their character accordingly, guarding against both the acceptable and the unacceptable forms of hatred and disdain. A person who sees hate as hate, regardless of how acceptable or justifiable it may seem, is a person who demonstrates the earmarks of trustworthiness.

3. A balanced perspective CV of pertinent past personal mistakes and what one has learned from them

I cringe when I review my past mistakes, both personal and professional. My cringing is not so much over mistakes made from lack of knowledge, such as presuming that a well-recognized major US bank would act ethically and not take, for itself and its hedge/mutual fund cronies, 85% of my parents’ retirement estate once it was placed into a Crummey Irrevocable Trust. Some mistakes come from being deceived by others, from a lack of knowledge, or from an inability to predict markets. What matters more in this regard is the ethical recall of the mistakes we commit through missteps in judgement, and of how we handled them. Those are the anecdotes I maintain on my mistake resume. For instance, in the 1990s I led a research team on a long trek of predictive studies supporting a particular idea regarding the interstitial occupancy characteristics of an alpha phase substance. I was so sure of the projected outcome that my team missed almost a year of excellent discovery and falsifying work, which could have shed light on this interstitial occupancy phenomenon. It was a Karl Popper moment: my gold fever over a string of predictive results misled my thinking into a false understanding of what was occurring in the experiments. Only after a waste of time and a small sum of money did my colleague nudge me with a recommendation that we shift focus a bit. I had to step off that perch and realize that a simple falsification test could have opened an entirely new, more accurate and enlightening pathway. I made the same error again in an economic analysis of trade between Asia and the United States. For months I missed an important understanding of trade, because I was chasing a predictive evidence set and commonly taught MBA principles relating costs and wealth accrual in trade transactions. I earned two very painful stripes on my arm from those mistakes. Today I am keenly aware of the misleading nature of predictive studies, as I observe a SSkeptic community in which deception by such Promotification pseudo-science is pandemic.

Mistakes made good are the proof in the pudding of our philosophy. I can satisfy my adviser with recitations or study the Stanford Encyclopedia of Philosophy for years, but unless I test at least some of the discovered tenets in my research life, I am really only an intellectual participant. Our mistakes are the hashmarks, the stripes we bear on the sleeves of wisdom and experience. Contending that “Oh, I was once a believer and now I am a skeptic,” as Michael Shermer does in his heart-tugging testimony about his errant religious past and his girlfriend, is not tantamount to citing mistakes in judgement. That is an apologetic explaining how one does not make mistakes; only others do. It is neither an objective assessment of self nor a CV of lessons learned. Michael learned nothing from the experience; rather, the story simply acts as a confirmation moral, a parable to confirm his religious club. Other people made the mistake; he just caught on to it as he became a member of the club. This type of ‘mistake’ recount is akin to saying “My only mistake was being like you.” That is not an objective assessment of personal judgement at all. It is arrogance, disguised by a fake cover of objectivity and research curiosity.

Watch for this sign of a lack of objectivity, especially the fake-mistake kind. It can be extraordinarily illuminating.

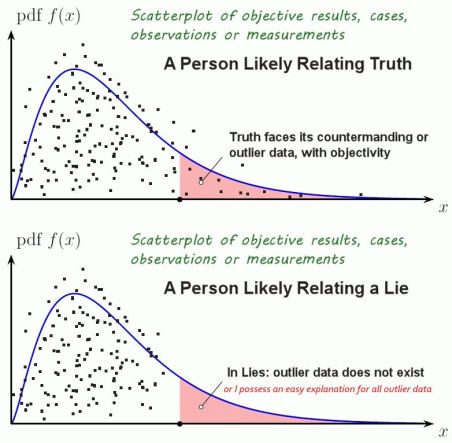

4. A reasonable set of statistical outliers in their theory observation set

Outliers call out liars. This is a long-held, subtle tenet which science lab managers employ to watch for indications of possible groupthink, bias or bandwagon effect inside their science teams. This is not to say that people who commit such errors are necessarily liars; rather, we are all prone to observational bias, the tendency to filter out information which does not fit our paradigm or research objective. Compound this with the human habit of accepting or excusing the unknowns and conflicting data in one’s own argument, while at the same time citing unknowns and conflicting data as fatal to another’s argument. In this hypocritical mechanism you might observe the beginning workings of a dogma.

Chekhov’s Gun

/philosophy : rhetoric : prevarication/ : a dramatic principle that states that every element in a fictional story must be necessary to support the plot or moral, and irrelevant elements should be excluded. It is used as part of a method of detecting lies and propaganda. In a fictional story, every detail or datum supports, and is accounted for in terms of, the primary agenda or idea. A storyteller will account in advance for every loose end or ugly underbelly of his moral message, all bundled up and explained nicely; no exceptions or tail conditions will be acknowledged. They are not part of the story.

One way to spot this is to watch for the presence of exceptions and outliers in the field of data presented in an argument, and for the way in which they are handled. Gregory Francis, professor of psychological sciences at Purdue University, has made a professional practice of critiquing studies whose results are “too good to be true,” and has been known to critique psychology papers on this basis. His reviews rest on the principle that experiments, particularly those with relatively small sample sizes, are likely to produce “unsuccessful” findings or outlier data, even when the experiments or observations support a credible and measurable phenomenon.² Now, as a professional who conducts hypothesis reduction hierarchy in my work, I know that it is a no-no to counter inception-stage falsifying work with countermanding predictive theory; a BIG no-no. This is a common tactic of those seeking to squelch a science. There is a time and a place in the scientific method for the application of predictive data sets, and it is not at the Sponsorship Ockham’s Razor Peer Input step. In other words, I professionally get upset when a new science is shot down at inception. I want to see the falsifying data, not the opposition’s opinion. So set aside the error Greg Francis is making here in terms of the scientific method within epigenetics, and focus instead on the astute central point he is making, which is indeed correct.

“If the statistical power of a set of experiments is relatively low, then the absence of unsuccessful results implies that something is amiss with data collection, data analysis, or reporting,” – Dr. Greg Francis, Professor of Psychology, Purdue University²
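To make Francis’s point concrete: if a set of independent experiments each has a statistical power p (the probability of a “successful” result when the effect is real), then the chance that every one of them succeeds is the product of those powers. The short Python sketch below assumes the experiments are independent and takes the power estimates as given; the function names, the example powers and the 0.1 cutoff are illustrative assumptions, not Francis’s published procedure.

```python
from math import prod


def prob_all_successful(powers):
    """Probability that every experiment reports a significant result,
    assuming independent experiments with the given statistical powers."""
    for p in powers:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"power must be in [0, 1], got {p}")
    return prod(powers)


def too_good_to_be_true(powers, n_successes, threshold=0.1):
    """Flag a set of experiments whose uniform success is improbable.

    The check only applies when no unsuccessful results were reported.
    The 0.1 threshold is a hypothetical, illustrative cutoff."""
    if n_successes != len(powers):
        return False  # unsuccessful results were reported; nothing suspicious here
    return prob_all_successful(powers) < threshold


# Hypothetical example: five small studies, each with an estimated power of 0.5,
# all reported as successful.
powers = [0.5] * 5
print(prob_all_successful(powers))      # 0.03125
print(too_good_to_be_true(powers, 5))   # True
```

With modest power, even five studies all coming up “successful” has only about a 3% chance, which is why a spotless record invites a closer look at data collection, analysis or reporting rather than serving as proof of the claim.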

An absence of outlier data can be a key warning flag that the person offering the data is up to something. They may be falling prey to observer bias or to some flaw in how they have positioned the observation set, or they may even be engaging in purposeful deception, as is the case with many of our Social Skeptics. It may also indicate that the person is adhering to a doctrine, a social mandate, a personal religious bias, or a furtive agenda passed to them by their mentors.

Be circumspect and watch for how a self-purported skeptic handles outlier data.

- Do they objectively view themselves, the body of what is known, and the population of observations and data?

- Do they simply spin a tale of complete correctness without exception?

- Do they habitually begin explanatory statements with comprehensive fatalistic assessments or claims to inerrant authority without exception?

- Do they bristle at the introduction of new data?

- Do they cite perfection of results inside an argument of prospective or well-established plurality?

- Do they even mock others who cite outlier data?

- Are all people who are unlike them stupid?

- Do they attack you if you point this out?

If so, be wary. Michael Shermer terms this outlying group of countermanding ideas, data and persons the “Dismissible Margin.” Dismissing it is how SSkeptics prepare a verisimilitude of neat, simple little arguments. These are key warning indicators that something is awry. If a skeptic cannot acknowledge their own biases or the outlier data itself, and cannot say “I don’t know,” they may very well lack the ability to command objective thinking. They may very well be lying. Lying in the name of science.

¹ Hunsberger, Bruce; Owusu, Vida; and Duck, Robert. “Religion and Prejudice in Ghana and Canada: Religious Fundamentalism, Right-Wing Authoritarianism, and Attitudes Toward Homosexuals and Women.” International Journal for the Psychology of Religion 9 (1999): 181-195.

² Yandell, Kate. “Epigenetics Paper Raises Questions.” The Scientist, October 16, 2014. http://www.the-scientist.com/?articles.view/articleNo/41239/title/Epigenetics-Paper-Raises-Questions/