When a Social Skeptic cites scientific consensus, ask them what polling method was employed and what comparative reduction was applied to that sample data. The vast majority will be unable to answer, because in reality they do not care; the ‘evidence’ serves its purpose and needs no further scrutiny. Passive aggregation studies built on equivocal concepts, vulnerable to the Bradley Effect, and drawn from situational samples of inherently biased venues, in which the responses of the natural pivot group inside polled science are controlled, serve only those who hold an agenda and wish to spin a consensus.

Ever wonder where Social Skeptics get these imperious and unquestionable claims to scientific consensus? Basically, surveys are conducted inside the various American science associations (such as the American Academy of Sciences or the American Academy of Neurology). There is nothing wrong with this means of collection, as these professional organizations should make every attempt to collect and understand the opinions of their members. Nor does the trustworthiness of the tallies these professional associations report generally fall into question. But exactly how is the broader community of scientists polled in order to determine what these organizations consider to be accurate or valid science? And how are the results analyzed, digested and communicated? Therein lies the rub. The habit of pseudo-professionally spinning statistics into false predictive and associative ‘evidence’ (see Sorwert Level I SSkeptics) is a very familiar technique to those who observe our cultural academic promotion of cheating through institution and process. Three primary problems reveal many specious claims of ‘scientific consensus’ to be, in reality, the prejudicial result of nonsense data gathering and propaganda dissemination. Let’s examine these industry problems.

Fabutistic

/fab-yu-’tis-tic/ : a statistic speciously cited from a study or body of skeptical literature, wherein the person reciting it misrepresents its context of employment or has scant idea what it means, how it was derived, or what it is or is not saying. Includes the instance wherein a cited statistic is employed in a fashion in which only the numerals are correct (i.e. “97%”) while the context of employment is extrapolated, hyperbolic or completely incorrect.

I. Collection Samples are Constrained to Small, Skewed or Invalid Subsets of Scientists

The first problem is that only a small, potentially biased portion of the scientific community is ever polled. When one executes confidence interval assessments on sampling measures, it is often surprising how tight a confidence can be established on a very small sample (often 2 – 5%) of the comprehensive population being measured. But this only holds when the sampling is conducted in an unbiased fashion. When samples are drawn in biased ways or through skewed avenues of collection, more diligence must be undertaken, because a larger sample does nothing to remove the skew. Let’s look at how this affects the accuracy of a prospective claim to consensus. Scientific consensus, as expressed by Wikipedia:

“Consensus is normally achieved through communication at conferences, the publication process, replication (reproducible results by others), and peer review.”¹
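To make the sampling point above concrete, here is a minimal Python sketch under purely hypothetical assumptions: a community of 100,000 scientists split exactly 50/50 on some view A, a 3% sample, and a ‘skewed venue’ in which holders of view A are twice as likely to be present. The helper names (`wald_interval`, `poll`) and all numbers are illustrative inventions, and the interval is the simple normal-approximation (Wald) interval, not the method of any particular survey.

```python
from __future__ import annotations

import math
import random


def wald_interval(p_hat: float, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% confidence interval (normal approximation) for a sample proportion."""
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - half_width, p_hat + half_width


def poll(population: list[bool], n: int, weights: list[float] | None = None,
         seed: int = 1) -> float:
    """Draw n respondents (with replacement) and return the share holding view A.

    With weights=None this is a simple random sample; otherwise each member's
    weight is their relative chance of being in the room when the survey is taken."""
    rng = random.Random(seed)
    sample = rng.choices(population, weights=weights, k=n)
    return sum(sample) / n


# Hypothetical community: 100,000 scientists, exactly half of whom hold view A.
population = [True] * 50_000 + [False] * 50_000
n = 3_000  # a 3% sample

fair = poll(population, n)

# Hypothetical skewed venue: holders of view A are twice as likely to attend.
venue_weights = [2.0 if holds_a else 1.0 for holds_a in population]
skewed = poll(population, n, weights=venue_weights)

for label, share in (("random sample", fair), ("skewed venue", skewed)):
    lo, hi = wald_interval(share, n)
    print(f"{label}: {share:.3f} (95% CI {lo:.3f} to {hi:.3f})")
```

A random 3% sample lands near the true 50% with a margin of roughly ±2 points. The skewed venue produces an equally tight interval centred near 67%, which excludes the true value entirely: a narrow confidence interval certifies precision, not freedom from bias.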

A consensus process is vulnerable when it hails only science developed under what is called a Türsteher Mechanism, or bouncer effect: a process producing a sticky but unwarranted prejudice against specific subjects. The astute researcher must always be aware of the presence of this effect before accepting any claim to consensus.

Türsteher Mechanism

/philosophy : science : pseudoscience : peer review bias/ : the effect or presence of ‘bouncer mentality’ inside journal peer review. An acceptance process for peer review which bears the following self-confirming bias flaws:

- Selection of a peer review body is inherently biased towards professionals whom the steering committee finds impressive,

- Selection of papers for review fits the same model as was employed to select the reviewing body,

- Selection of papers from non core areas is very limited and is not informed by practitioners specializing in that area, and

- Is unable to handle evidence that is not gathered in the format it understands (large scale, hard to replicate, double blind randomized clinical trials or meta-studies).

In such a process, the selection of initial papers is biased. Under this flawed process, the need for consensus results not simply in attrition of anything that cannot be agreed upon, but rather in a sticky bias against anything which has not successfully passed this unfair test in the past. The result is the artificial and unfair creation of a pseudoscience.

So consensus measures are drawn not from the whole body of scientists, but rather from

- those whose universities/sponsors pay the $3000 to $9000 to attend various annual professional conferences,¹ – (small – skewed)

- those involved in the publication process (see example below)² – (very small – skewed)

- those involved in the discipline providing replication and peer review¹ – (minuscule – biased)

Now certainly, these are the science professionals who should be counted first in the ‘consensus measuring’ process. Of this there is no doubt. But does this measurement approach really reflect the consensus opinion of scientists as a whole, as claimed by Social Skeptics? Statistically, rarely to almost never. I know that when I attend conferences, like many professionals, I only fill out the surveys if they pertain to an issue about which I feel passionate. If I am speaking or have a scheduled breakout session with a group, I could not care less about the professional surveys. And like me at a conference, most of the individuals in the three categories above already have a vested interest in the science under consideration in the poll. So of course they are more likely to support the current popular emphasis inside their own avenue of research, at conferences and inside publication circles. Polling them is a bit like polling former and future National Football League players at an NFL Draft Convention as to whether or not they believe that athletic budgets should be increased at the collegiate level. Of course the overwhelming majority of respondents are going to be all for it. Then we publish the headline “Vast consensus of athletes are for increased college athletic budgets.” This is spin, and it is a regular course of action in this ‘consensus telling’ process on the part of Social Skepticism. Even if we did undertake the discipline of establishing a confidence interval around such collection measures, which is rarely done, the collection process would have simply been a charade. This is most often the reality.
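The conference-survey problem is a self-selection (non-response) bias, and its effect can be worked out with simple arithmetic. The sketch below assumes, purely hypothetically, that 20% of a scientific community has a vested interest in a proposition and answers surveys 60% of the time, while the other 80% (30% who support it and 50% who do not) answers only 5% of the time. The function name and every rate are illustrative assumptions, not measurements.

```python
from __future__ import annotations


def respondent_support(groups: dict[str, tuple[float, float, bool]]) -> float:
    """Share of *respondents* who support a proposition.

    groups maps a label to (share_of_community, response_rate, supports).
    Each group's contribution to the respondent pool is share * response_rate."""
    responding = sum(share * rate for share, rate, _ in groups.values())
    supporting = sum(share * rate for share, rate, supports in groups.values() if supports)
    return supporting / responding


# Hypothetical community; the response rates are illustrative, not measured.
community = {
    "vested, supportive": (0.20, 0.60, True),
    "uninvolved, supportive": (0.30, 0.05, True),
    "uninvolved, not supportive": (0.50, 0.05, False),
}

true_support = sum(share for share, _, supports in community.values() if supports)
polled_support = respondent_support(community)

print(f"support in the whole community: {true_support:.0%}")
print(f"support among survey respondents: {polled_support:.1%}")
```

Under these assumptions roughly 84% of respondents support the proposition even though only half of the community does; nothing about the count is falsified, only who chose to answer.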

For an outline of the nine ways in which pollsters purposely manipulate polls to their liking, see A Word About Polls.

Unethically Biased Samples, Not Just Skewed

There is, however, a fourth very popular venue through which consensus is collected, one which Wikipedia skips entirely: polling the members of ‘scientific’ organizations. One such example can be found in the poster child organization for falsely contended statistics of consensus, the American Association for the Advancement of Science (AAAS). Commonly touted by consensus spinners as an ‘organization of scientists,’ it alarmingly and disconcertingly is not.

“The claim of ‘consensus’ has been the first refuge of scoundrels; it is a way to avoid debate by claiming that the matter is already settled…” ~Michael Crichton

The charter and policy declarations of the AAAS clearly delineate it as a social activism group. This is far from a valid basis from which to claim that one has sampled an opinion representative of scientists. This is the opinion of ONE BIASED ORGANIZATION. Its charter as a social activist organization is to influence, intimidate and bypass the public trust in an attempt to influence the government: a right granted solely to the American public, stolen by a special interest. Finally, to demonstrate the non-applicability of ‘consensus’ claims stemming from this organization, from its 1973 AAAS CONSTITUTION (Amended):∈

Article III. Membership and Affiliation

Section 1. Members. Any individual who supports the objectives of the Association and is willing to contribute to the achievement of those objectives is qualified for membership.∋

Let us put the AAAS membership stipulations in their objective (less equivocal-more accurate) form:

To be a member scientist of the AAAS you must support the objectives of the AAAS and must contribute your vote on issues in the way in which they urge. Otherwise you cannot be a member.

This series of declarations shows that, in order to join the AAAS, one must support the advocacy goals of the organization, and does not actually have to be a scientist. But if you are a scientist member, you can only vote one way. This is a self-regulating requirement, and it calls into serious question the contention that the AAAS and many similar consensus-deriving institutions in any way represent the consensus opinion of all scientists.

II. Fabutistics are Derived from Activism Biased Sample Pools, Employ Equivocal Measures and Bad Methods and are Spun out of Context

But let’s presume our claim to consensus is issued by one of the more ethical media houses or journalists, and retract the sample domain of scientists back to Wikipedia’s conservative (ahem… *wink*) three-group representation of how consensus is measured. Of the three measure groups above, certainly key among them are those who have actually published work on the matter at hand (bullet point 2 above). Regarding consensus measuring among those involved in the publication process, a study which stands exemplary of fabutistic spinning is a celebrated industry survey recently published in IOP Science, measuring the levels of consensus around Anthropogenic Global Warming (AGW). The abstract reads:

“We analyze the evolution of the scientific consensus on anthropogenic global warming (AGW) in the peer-reviewed scientific literature, examining 11,944 climate abstracts from 1991–2011 matching the topics ‘global climate change’ or ‘global warming’. We find that 66.4% of abstracts expressed no position on AGW, 32.6% endorsed AGW, 0.7% rejected AGW and 0.3% were uncertain about the cause of global warming. Among abstracts expressing a position on AGW, 97.1% endorsed the consensus position that humans are causing global warming.”²
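The arithmetic behind the headline figure can be reproduced from the percentages quoted in the abstract. This short Python sketch (variable names are illustrative) shows that the ‘97%’ is a share of the 33.6% of abstracts that took a position, so endorsing abstracts amount to 32.6% of those examined. Recomputing from the rounded percentages gives about 97.0% rather than 97.1%; the small gap presumably comes from the study working with unrounded counts.

```python
# Percent of the 11,944 abstracts in each category, as quoted in the abstract.
shares = {"no position": 66.4, "endorse": 32.6, "reject": 0.7, "uncertain": 0.3}

took_position = shares["endorse"] + shares["reject"] + shares["uncertain"]

# The headline figure: endorsements as a share of abstracts that took a position.
endorse_among_position = 100 * shares["endorse"] / took_position
# The same endorsements as a share of every abstract examined.
endorse_among_all = shares["endorse"]

print(f"abstracts taking any position: {took_position:.1f}%")
print(f"endorse, among position-takers: {endorse_among_position:.1f}%")
print(f"endorse, among all abstracts: {endorse_among_all:.1f}%")
```

Neither denominator is wrong in itself; the fabutistic arises when the first number is quoted as though it described the second population.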

Here, despite the real measure that two thirds of the examined abstracts on global climate change expressed no position on AGW, those which did almost unanimously endorsed AGW. This is an inherently skewed measure (I am an AGW proponent, so this is not an argument against it; it simply stands as an example critique of bad method and credenda-based reporting). In the advocacy set we have a guarantee of a given threshold of bias, coupled with the fact that there exists a disincentive towards the antithetical set of studies. If I had made measurements which showed evidence against AGW, would I publish this data in the current political climate? Hell no! I would be a fool to do something like this and trash my career in science. If I did indeed dissent, I would join the 66.4% and tender no opinion. In other words, be a skeptic. This assembly of stats is a key example of an ethics problem: most likely the right answer on AGW, but a highly unethical method employed to support my conclusion. As one of my favorite graduate professors would have said, “Mr. K., you got the right answer by coincidence but the method is terribly wrong, so sorry, no credit on this one.” In other words, to sum up the above recitation from the IOP Science article:

“97% (97.1%, don’t forget the .1%) of those who went looking for a specific answer, found that specific answer. Amazing how that works. Two thirds of those who study that same subject in general dissented or refused to comment.”

…This is not science, not even close. A similar fabutistic could also be assembled from this study result, and used by the opposition:

“Two Thirds of Global Climate Change researchers are skeptical of AGW.”

Actually, in 66.4% of the abstracts in this instance, scientists who studied the very issue offered no comment. I find that to be a problematically large number, but am hesitant to spin any stats off it. Both of the equivocal positions above ruffle my feathers with regard to misrepresentation. But more commonly you will see this “97% of scientists” fabutistic inexpertly wielded at so-called ‘evidence based’ skeptic sites which haven’t the first clue how it was derived, what it means, or what it does not say. The statistic is then imperiously employed by a number of regurgitation outlets in order to squelch free speech.

This is much akin to polling those who have filed burglary reports with the local police department as to whether or not they are in favor of increased law enforcement budgets. Of course 97% of this group is going to favor something which stands as the crucial concept entailed in the report they just filed. Possibly the right answer from a taxation standpoint, but the wrong method of deriving consent of the governed. Moreover, what if we polled the broader community, those being taxed locally, as to whether they wanted an increase in law enforcement budgets, and in order to file their opinion they had to hand their poll sheets in person to the people who had filed burglary reports over the last year? This is how nonsensical the science ‘consensus’ process of measurement is in reality.

The valid way to measure scientific consensus, and to avoid the boast of pretending to have measured it, is to employ the ethical voting disciplines of a Borda Count style of poll vote, along with a Condorcet Binary Matchup reduction embedded into the survey system. After all, when we execute hypothesis reduction inside the scientific method, we are in essence conducting a Condorcet reduction anyway. This is science. Why not use actual science to gauge the opinion of scientists? This allows those in pivot groups, who are simply going with the flow, to highlight secondary theory which might emerge as popular among scientists in the binary iterations.† It does so without the social pressure from SSkeptics, and without the specious results stemming from the ‘studying of studies’ (above²) among climate scientists as ‘voters,’ none of whom were aware that their studies would be treated as ‘votes’ underpinning a completely different context and contention at a later date.
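As a rough illustration of the two disciplines named above, the Python sketch below tallies the same ranked ballots three ways: plurality (first choices only), a Borda count (with k hypotheses, a first place earns k - 1 points, down to 0 for last) and a Condorcet check (which hypothesis beats every other in head-to-head matchups). The ballots are invented for illustration, every ballot is assumed to rank all hypotheses, and this is a textbook Borda/Condorcet tally, not the specific multi-stage procedure analysed in the cited paper.

```python
from __future__ import annotations

from collections import Counter
from itertools import combinations

# Hypothetical ballots: each ranking (most to least favored) maps to a voter count.
ballots = {
    ("H1", "H2", "H3"): 40,
    ("H3", "H2", "H1"): 35,
    ("H2", "H3", "H1"): 25,
}


def plurality(ballots: dict[tuple[str, ...], int]) -> Counter:
    """Count first choices only."""
    tally: Counter = Counter()
    for ranking, voters in ballots.items():
        tally[ranking[0]] += voters
    return tally


def borda(ballots: dict[tuple[str, ...], int]) -> Counter:
    """Borda count: with k hypotheses, position i (0-based) earns k - 1 - i points."""
    scores: Counter = Counter()
    for ranking, voters in ballots.items():
        k = len(ranking)
        for i, hypothesis in enumerate(ranking):
            scores[hypothesis] += (k - 1 - i) * voters
    return scores


def condorcet_winner(ballots: dict[tuple[str, ...], int]) -> str | None:
    """Return the hypothesis that wins every pairwise matchup, or None if none does."""
    candidates = sorted(next(iter(ballots)))
    total = sum(ballots.values())
    pairwise_wins: Counter = Counter()
    for a, b in combinations(candidates, 2):
        a_over_b = sum(v for r, v in ballots.items() if r.index(a) < r.index(b))
        if a_over_b > total - a_over_b:
            pairwise_wins[a] += 1
        elif a_over_b < total - a_over_b:
            pairwise_wins[b] += 1
    for c in candidates:
        if pairwise_wins[c] == len(candidates) - 1:
            return c
    return None


print("plurality:", plurality(ballots).most_common())
print("borda:    ", borda(ballots).most_common())
print("condorcet:", condorcet_winner(ballots))
```

Here plurality crowns H1 with only 40% of first choices, yet a majority prefers H2 to H1 (60 to 40) and to H3 (65 to 35), and the Borda count agrees (H2 125, H3 95, H1 80): the kind of secondary theory the binary iterations can surface. A Condorcet winner does not always exist; cyclic preferences return `None`.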

An integrity-based vote and rank process establishes true opinion, avoiding the pitfalls of passive aggregation approaches based on equivocal concepts and situational sampling of inherently biased venues. As practiced in the study above, much equivocation can be spun with subjective measures under the moniker of “endorsed.” When a Social Skeptic cites scientific consensus, ask them what polling method was employed and what comparative reduction was applied to that sample data. The vast majority will be unable to answer, because in reality they do not care; the ‘evidence’ serves its purpose and needs no further scrutiny. Elimination of these disciplines is the leverage Napoleon Bonaparte used to skew voting inside the French Academy of Sciences in 1801, and assume its presidency. Social Skeptics utilize this exact same revisionist approach to “polling” today to establish a Bonapartesque rule over the perceptions of science.³

III. The Broader Reality is Pivot Group Manipulation Based, Spun and Influenced by Social Skepticism

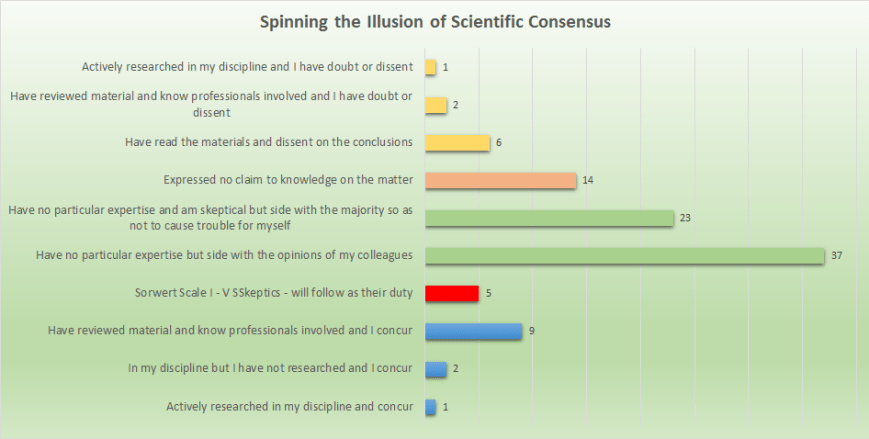

Consider the following hypothetical example histogram of scientific opinion regarding a pluralistic (more than one explanatory candidate) issue inside science. Scientists are polled by a single-question survey, but allowed to elaborate on their position regarding the issue. Details about the nature of each opinion, which are not shared in the published result, are then added. Let’s examine how a breakout of opinion aligned fairly evenly along the two explanations can be converted, by parties with an agenda, into a 90+ percent consensus.

Notice in the example above that even though one can cite a 91% consensus on this data, the true experts on the subject are divided 50/50.

Social Skeptics, in similar fashion, certainly fail to seek true profile statistics for the broader group of scientists. Moreover, once the results are in, they have a nasty habit of grouping the results into spun camps of ‘quasi-correct’ framing. I have interacted with several hundred engineers and scientists over my years, and an effect similar to that in the AGW study above can be drawn out through discussion with scientists. It is an effect which will never show up under the measurement approach employed by the various undisciplined science association polls discussed above. The broad group of scientists, in my opinion, does not overwhelmingly support every issue as claimed by the Social Skeptic pundits who insist on speaking for science. Take the example immediately above, one not too far off the reality I see in my interactions with scientists: even were we to actually perform a Borda-style sampling poll and obtain the pre-Condorcet results shown, Social Skeptics, in similar fashion to the AGW example above, would be able to spin the results in twisted ways, hinging on the equivocal uses of the phrases ascribed to each results cluster. Notice the pivot group (in green) in the example chart above. It comprises 60 members of a supposed 100-member Borda group. These individuals range from those who were genuinely intimidated into casting a certain vote, to those who simply went along because all their colleagues appeared to be going along with the vote (perhaps they obtained this impression via previous non-Borda, non-Condorcet unethical polls). This is known as a Bradley Effect, and it is part of the essence of pluralistic ignorance. This is what a Condorcet-Borda method would seek to weed out:

Bradley Effect

/consensus : bias influence : sampled population bias : error/ : the principle wherein a person being polled, especially in the presence of trial-heat or iteration-based polls, will tend to give the response which they believe the polling organization or the prevailing social pressure expects of them, or which will not identify them with the wrong camp on a given issue. The actual sentiment of the polled individual is therefore not captured.∝

This is why we like to run poll after poll. Previous, less scientific polls end up influencing the pivot group in subsequent, more scientific polls. Such is the nature of spun deception. Pollsters know this; the general public and the larger body of scientists do not.
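The feedback loop described above can be caricatured with a deterministic toy model. In this Python sketch every number is a hypothetical assumption: 20% committed proponents, 20% committed dissenters, and a 60% pivot group privately split 30/30. Pivot members who privately disagree report agreement anyway with a probability (`pressure`) that grows with how far the last published result exceeded 50%; the `amplification` factor of 4 is arbitrary. It illustrates a Bradley-style effect and is not a calibrated model of any real survey.

```python
from __future__ import annotations


def next_poll(prev_published: float, proponents: float = 0.20, pivot_for: float = 0.30,
              pivot_against: float = 0.30, amplification: float = 4.0) -> float:
    """Expected reported share 'for' the proposition in the next poll.

    Committed dissenters (the remaining 20%) always report against and are implicit.
    Proponents and pivot members who privately agree report 'for'. Pivot members who
    privately disagree report 'for' with probability `pressure`, which rises with how
    far the previously published result exceeded 50%, clipped to the range 0..1."""
    pressure = max(0.0, min(1.0, amplification * (prev_published - 0.5)))
    return proponents + pivot_for + pivot_against * pressure


def run_polls(first_published: float, rounds: int = 5) -> list[float]:
    """Feed each poll's reported result into the next one."""
    history = []
    published = first_published
    for _ in range(rounds):
        published = next_poll(published)
        history.append(published)
    return history


skewed_start = run_polls(0.70)  # hypothetical earlier, less scientific poll
honest_start = run_polls(0.50)  # an accurate first reading of private views

print("after a skewed first poll:", [round(x, 3) for x in skewed_start])
print("after an honest first poll:", [round(x, 3) for x in honest_start])
```

Private opinion is identical in both runs (50% for, 50% against); only the first published number differs, yet the skewed start settles at a reported 80% while the honest start stays at 50%.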

Moreover, of critical influence in skewed polls is the group within Social Skepticism tasked with providing the herding incentive to the 60 middle-band members who make up the pivot group. The job of the small minority of Social Skeptics (in red, but in reality WAY less than 5%) is to shout the previous results loudly, and to ensure that any member of the pivot group (green) who votes in dissent had better have a damn good reason to do so. They accomplish this by attacking the dissent group as denialists, as stupid, and as unworthy of their careers in science. This is not a poll at all. This is a junta election exercised by a constrained group referendum. This is how elections proceeded in Uganda for decades.‡ Junta policing, corrupt enforcers, tyrannical rule in outcomes. This non-expert, all-important pivot group then goes along with the herd and is included in the 90+% profiles, which Social Skepticism then spouts as victory. Get these people alone, in an informal setting, and privately they will espouse a slightly different flavor of opinion. It is clear that one cannot oppose the will of Social Skepticism without penalty, and we all bear this in mind in processes such as polling. In philosophy of science, this condition is called pluralistic ignorance.

Pluralistic Ignorance

/philosophy : science : social : consensus : malfeasance/ : most often, a situation in which a majority of scientists and researchers privately reject a norm, but incorrectly assume that most other scientists and researchers accept it, often because of a misleading portrayal of consensus by agenda-carrying social skeptics. They therefore choose to go along with something with which they privately dissent or are neutral.

ad populum – a condition wherein the majority of individuals believe without evidence, either that everyone else assents or that everyone else dissents upon a specific idea.

ad consentum – a self-reinforcing cycle wherein the majority of members in a body believe, without evidence, that a certain consensus exists, and they therefore support that idea as consensus as well.

ad immunitatem – a condition wherein the majority of individuals are subject to a risk, however most individuals regard themselves to reside in the not-at-risk group – often because risk is not measured.

ad salutem – a condition wherein a plurality or majority of individuals have suffered an injury, however most individuals regard themselves to reside in the non-injured group – often because they cannot detect such injury.

Once you have ‘documented’ scientific consensus, then free speech to the contrary is not allowed in the Social Skeptic’s world. And after all ‘They are the Science.’

“In so far as scientists speak in one voice, and dissent is not really allowed, then appeal to scientific consensus is the same as an appeal to authority.”

~ kathymuggle, senior member, mothering.com; post Aug 12, 11:15 am

This enables the non-Condorcet based analysis to produce equivocal aggregations such as appear in the chart above. The silent and the pivot group are stuffed into the ‘consensus’ herd, and credit is claimed. Tally another victory for Social Skepticism in converting a scant 12% proponent base into a 90+% tyranny. Headlines are then spun accordingly. Note that none of this has anything whatsoever to do with the validity of the science, but simply with the method of claiming victory. It is, after all, errant method which true skeptics should decry, while simultaneously eschewing the enforcement of answers as a member of the Cabal of intimidation (in red) above. Amazingly, every single issue of plurality can be assembled, digested, misrepresented and then disseminated to the public and the body of science at large in this exact same fashion. The process is indeed, as kathymuggle laments at mothering.com, all simply a game of appeal to authority.
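The re-binning described above can be expressed as simple arithmetic. The Python sketch below uses a hypothetical 100-member breakdown chosen only so that it reproduces the figures quoted in the text (a 60-member pivot group, an enforcer group under 5%, a 12% proponent base, a 91% headline and experts split 50/50); the chart’s actual cluster sizes may differ, and the group labels and the `percent` helper are illustrative.

```python
from __future__ import annotations

# Hypothetical 100-member group, sized to match the figures quoted in the text.
groups = {
    "expert, favors explanation A": 9,
    "expert, favors explanation B": 9,
    "enforcers of A (red)": 3,
    "pivot group, goes along with A (green)": 60,
    "no stated position": 19,
}


def percent(numerator: list[str], denominator: list[str]) -> float:
    """Percentage of the members in the `denominator` groups who fall in `numerator` groups."""
    return 100 * sum(groups[g] for g in numerator) / sum(groups[g] for g in denominator)


everyone = list(groups)
experts = ["expert, favors explanation A", "expert, favors explanation B"]

# Spun headline: everyone not in the explicit dissent camp is counted as 'consensus'.
headline = percent([g for g in everyone if g != "expert, favors explanation B"], everyone)
# Active proponents: experts favoring A plus the enforcers.
proponent_base = percent(["expert, favors explanation A", "enforcers of A (red)"], everyone)
# How the people who actually know the subject divide.
expert_split = percent(["expert, favors explanation A"], experts)

print(f"headline consensus: {headline:.0f}%")
print(f"active proponent base: {proponent_base:.0f}%")
print(f"experts favoring A: {expert_split:.0f}%")
```

The same raw responses support a 91% headline, a 12% active proponent base and a 50/50 split among experts; which number gets reported depends entirely on how the clusters are binned.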

Social Skepticism: through a rush-inducing magician’s deception, we can prove anything we want. It is a type of heady power. A junta mentality which goes unrecognized, because we are all just too smart to see it.

¹ Wikipedia, Scientific consensus; http://en.wikipedia.org/wiki/Scientific_consensus.

† Bag, Sabourian, et al., “Multi-stage voting, sequential elimination and Condorcet consistency,” Journal of Economic Theory, Volume 144, Issue 3, May 2009, Pages 1278–1299.

∈ American Association for the Advancement of Science (AAAS) website: http://www.aaas.org/

∋ American Association for the Advancement of Science (AAAS) 1973 AAAS CONSTITUTION (Amended) http://www.aaas.org/aaas-constitution-bylaws

∝ Pew Research, US Survey Research: Election Polling, http://www.pewresearch.org/methodology/u-s-survey-research/election-polling/
